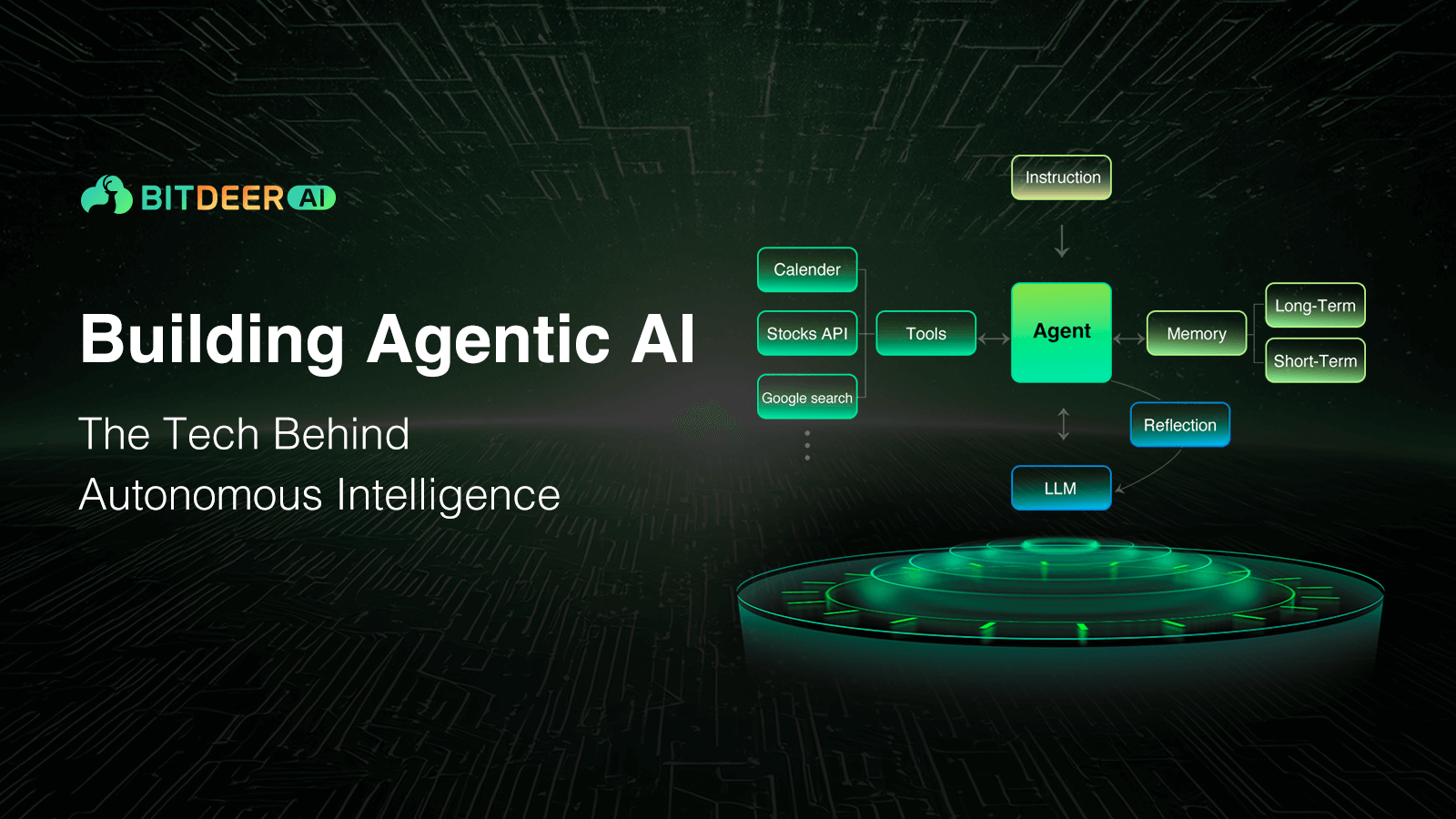

Key Technical Components of Achieving Agentic AI

Agentic AI represents the next evolution in autonomous systems, going beyond reactive language models to perform complex, multi-step tasks with minimal human oversight. In practice, building such systems requires a deep integration of advanced model training strategies and robust data engineering. Today, we’ll explore the core technical components necessary to achieve agentic AI, focusing on how to train models that support iterative, chain-of-thought reasoning and how to design database architectures that enable rapid, context-rich data retrieval.

Advanced Model Training Strategies

- Fine-Tuning and Domain-Specific Adaptation

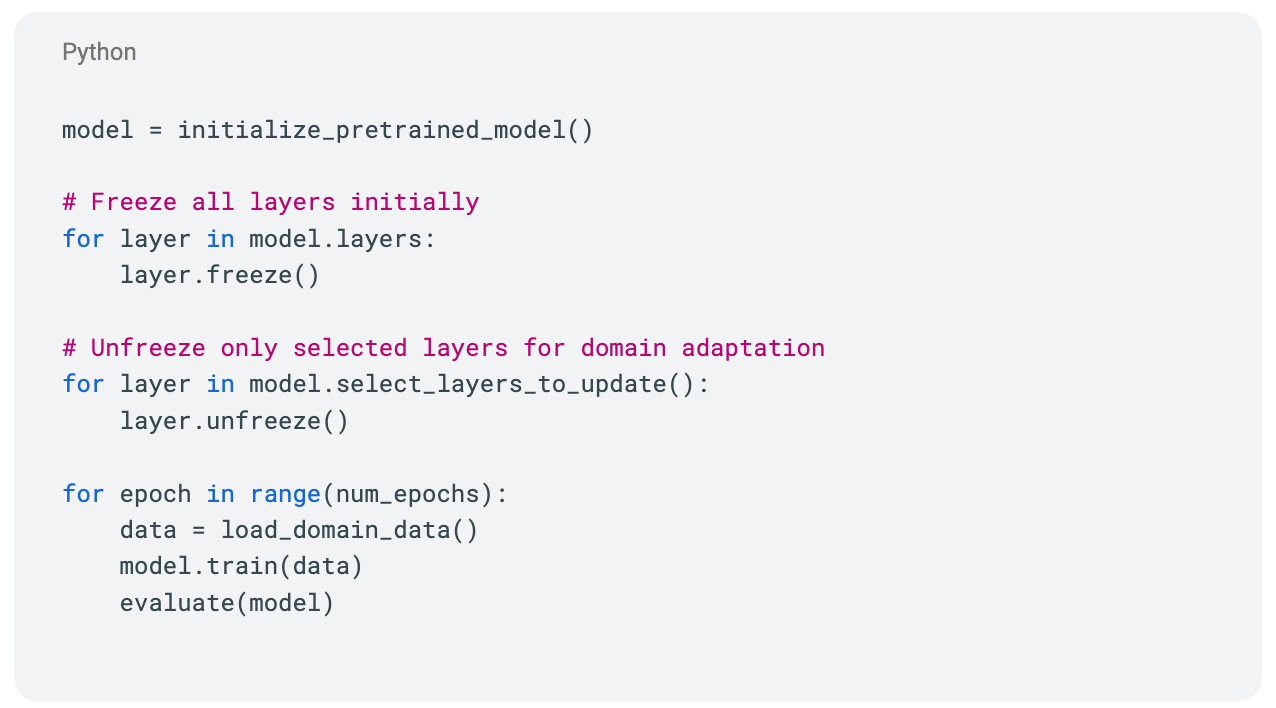

- Transfer Learning with Domain-Specific Data: Transfer learning typically involves updating selected layers of a pre-trained model (often the later ones) on domain-specific data while keeping the remaining layers frozen. This method retains much of the model’s foundational knowledge while adjusting only the selected layers to align with the target domain.

- Ideal for tasks where you want the model to adapt to a new domain (like legal, medical, or technical text) without introducing entirely new architecture.

- Benefit: Balances preservation of general knowledge with domain-specific adaptation.
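To make "update some layers, freeze the rest" concrete, here is a minimal sketch using a toy two-layer scalar model rather than a real network. The weights, the domain data, and the function names `forward` and `fine_tune_head` are hypothetical; in a framework such as PyTorch the same idea is usually expressed by disabling gradients on the frozen layers.

```python
# Toy two-layer scalar model: a frozen "pretrained" base layer and a trainable head.
# All weights, data, and function names are hypothetical illustrations.

def forward(x, w_base, w_head):
    """Base layer produces a feature; the head maps that feature to a prediction."""
    feature = w_base * x
    return w_head * feature, feature


def fine_tune_head(data, w_base, w_head, lr=0.01, epochs=200):
    """Gradient descent on squared error that updates w_head only; w_base is never changed."""
    for _ in range(epochs):
        for x, y in data:
            pred, feature = forward(x, w_base, w_head)
            grad_head = 2 * (pred - y) * feature  # d/dw_head of (pred - y)**2
            w_head -= lr * grad_head
    return w_head


pretrained_base = 2.0  # frozen layer: the "general knowledge" we keep
pretrained_head = 1.0  # head initialised from pre-training, then adapted
domain_data = [(1.0, 6.0), (2.0, 12.0), (3.0, 18.0)]  # hypothetical domain task: y = 6x

new_head = fine_tune_head(domain_data, pretrained_base, pretrained_head)
print(round(new_head, 3))  # converges toward 3.0, because 2.0 * 3.0 = 6.0
```

Only the head moves; the base weight is identical before and after fine-tuning, which is the trade-off the bullet describes.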

- Reinforcement Learning (RL) for Autonomy:

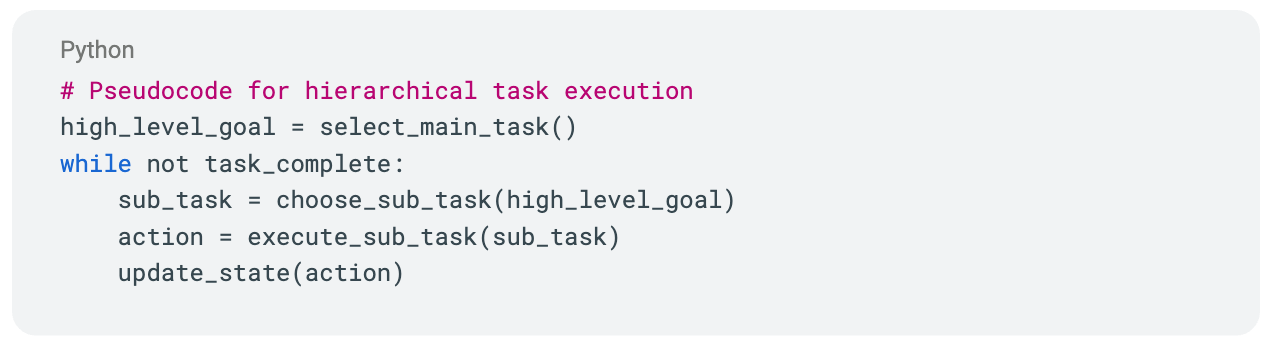

- Hierarchical Reinforcement Learning (HRL): Break complex tasks into sub-goals for long-term planning, such as a robot assembling a product by mastering smaller actions like picking up parts.

- This method structures tasks hierarchically, making it ideal for sequential decision-making in robotics or game AI.

- Applications: Well suited to tasks requiring sustained reasoning over time.
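The sketch below illustrates only the hierarchical control structure that HRL learns over, using the product-assembly example: a high-level policy picks the next sub-goal, and a low-level policy emits the primitive actions for it. As an explicit assumption, both policies are hand-coded here; in actual HRL each level would be a learned policy, and the state layout and action names are hypothetical.

```python
# Hierarchical control structure: high-level sub-goal selection over low-level primitives.
# Assumption: both policies are hand-coded stand-ins for learned HRL policies.

def high_level_policy(state):
    """Choose the next sub-goal for a hypothetical product-assembly task."""
    for part in state["parts_needed"]:
        if part not in state["placed"]:
            return ("place", part)
    return None  # every sub-goal is complete


def low_level_policy(subgoal, state):
    """Return the primitive actions that achieve one sub-goal."""
    _, part = subgoal
    actions = []
    if state["holding"] != part:
        actions.append(f"pick_up:{part}")
    actions.append(f"place:{part}")
    return actions


def apply(action, state):
    """Update the toy environment state for one primitive action."""
    verb, part = action.split(":")
    if verb == "pick_up":
        state["holding"] = part
    elif verb == "place":
        state["placed"].append(part)
        state["holding"] = None


def run_episode(state):
    trace = []
    while (subgoal := high_level_policy(state)) is not None:
        for action in low_level_policy(subgoal, state):
            apply(action, state)
            trace.append(action)
    return trace


state = {"parts_needed": ["base", "frame", "cover"], "placed": [], "holding": None}
print(run_episode(state))
# ['pick_up:base', 'place:base', 'pick_up:frame', 'place:frame', 'pick_up:cover', 'place:cover']
```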

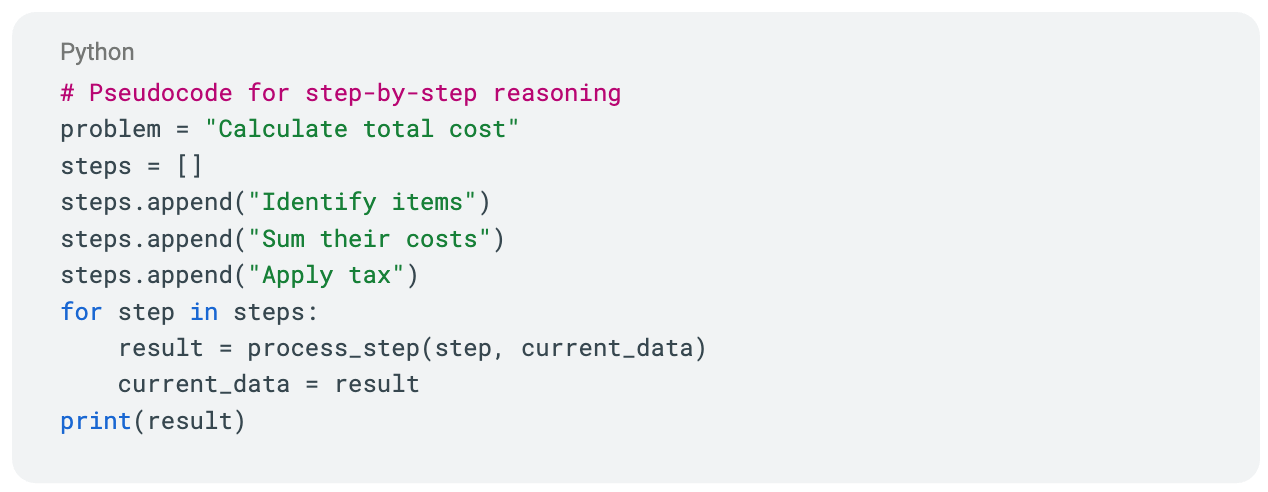

- Reasoning Techniques: Techniques such as ReAct, Chain-of-Thought (CoT), and Tree-of-Thoughts are employed to guide the model through step-by-step reasoning. These methods help the AI break down complex tasks into logical subcomponents and incorporate feedback loops to refine its strategy iteratively.

- ReAct (Reasoning + Acting): Combines reasoning with actions, like API calls, for dynamic environments.

- Chain-of-Thought (CoT): Guides models to solve problems step-by-step, boosting accuracy in complex scenarios.

- Tree-of-Thoughts (ToT): Explores multiple reasoning paths to find the best solution.

- Each technique imposes a structured reasoning process and can be implemented as a prompting pattern or control loop in most programming environments.

- Use Case: CoT excels in math or logic problems, while ReAct suits interactive tasks.
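A ReAct agent can be sketched as a loop that alternates Thought, Action, and Observation until the model produces a final answer. In this minimal sketch, `scripted_model` is a hypothetical stand-in for a real LLM call, and the `multiply` tool and the `Action: tool[arg]` text format are assumptions chosen for illustration, not a specific library's API.

```python
import math
import re

# Hypothetical tool registry; "multiply" parses an expression like "17 * 23".
TOOLS = {
    "multiply": lambda arg: str(math.prod(int(n) for n in arg.split("*"))),
}


def scripted_model(transcript):
    """Stand-in for an LLM call: returns the next Thought/Action or a Final Answer."""
    if "Observation:" not in transcript:
        return "Thought: I should compute the product.\nAction: multiply[17 * 23]"
    last_obs = transcript.rsplit("Observation:", 1)[1].strip()
    return f"Thought: The tool returned the result.\nFinal Answer: {last_obs}"


def react(question, model, tools, max_steps=5):
    """Alternate reasoning and tool use until the model emits a final answer."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = model(transcript)
        transcript += step + "\n"
        if "Final Answer:" in step:
            return step.split("Final Answer:", 1)[1].strip(), transcript
        match = re.search(r"Action: (\w+)\[(.*)\]", step)
        if match:
            name, arg = match.groups()
            transcript += f"Observation: {tools[name](arg)}\n"
    return None, transcript  # step budget exhausted without an answer


answer, log = react("What is 17 * 23?", scripted_model, TOOLS)
print(answer)  # 391
```

The `max_steps` budget is a design choice worth keeping in any real loop, since a model that never emits a final answer would otherwise run indefinitely.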

- Multi-Modal Integration: To achieve comprehensive situational awareness, modern training strategies incorporate multi-modal data, such as text, images, and sensor data, allowing agents to better perceive and reason about their environment. This integration is critical when agents need to interact with both digital and physical data sources.
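One common way to combine modalities is feature-level fusion: encode each modality separately, normalize each embedding so no modality dominates by scale, and concatenate the results into a single state vector. This is a minimal sketch of that one technique; the embedding values are hypothetical encoder outputs, and real systems often use learned fusion layers instead.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else list(vec)


def fuse(modalities):
    """Concatenate normalised per-modality embeddings in a fixed (sorted) key order."""
    fused = []
    for name in sorted(modalities):
        fused.extend(l2_normalize(modalities[name]))
    return fused


observation = {
    "text": [3.0, 4.0],         # hypothetical text-encoder output
    "image": [0.0, 10.0],       # hypothetical vision-encoder output
    "sensor": [1.0, 0.0, 0.0],  # hypothetical sensor features
}
print(fuse(observation))  # image, then sensor, then text segments, each unit length
```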

Best Practices for Model Optimization

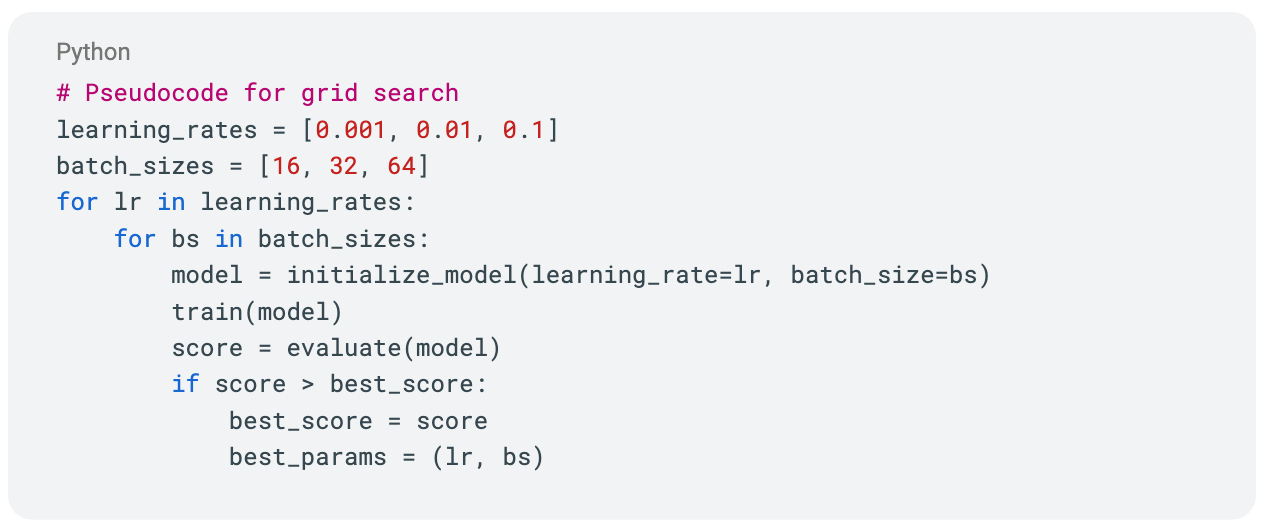

- Hyperparameter Tuning and Transfer Learning: Techniques such as grid search, Bayesian optimization, or automated machine learning (AutoML) help tune the model’s learning rate, batch size, and other hyperparameters for the specific task.

An example of grid search: Test combinations of parameters like learning rate and batch size to optimize performance.
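Grid search can be sketched in a few lines: enumerate every combination in the grid, score each one, and keep the best. Here `validation_loss` is a hypothetical toy objective standing in for an actual train-and-evaluate run, and the candidate values are illustrative.

```python
from itertools import product


def validation_loss(learning_rate, batch_size):
    """Hypothetical stand-in for training and evaluating a model with these settings."""
    # Toy loss surface with its minimum at learning_rate=0.01, batch_size=64.
    return (learning_rate - 0.01) ** 2 * 1e4 + abs(batch_size - 64) / 64


def grid_search(grid, objective):
    """Exhaustively evaluate every parameter combination and return the best one."""
    best_params, best_loss = None, float("inf")
    keys = list(grid)
    for values in product(*(grid[k] for k in keys)):
        params = dict(zip(keys, values))
        loss = objective(**params)
        if loss < best_loss:
            best_params, best_loss = params, loss
    return best_params, best_loss


grid = {"learning_rate": [0.1, 0.01, 0.001], "batch_size": [32, 64, 128]}
best, loss = grid_search(grid, validation_loss)
print(best)  # {'learning_rate': 0.01, 'batch_size': 64}
```

The cost grows multiplicatively with each added parameter (9 runs here), which is why Bayesian optimization is often preferred for larger search spaces.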

- Regularization and Robustness Measures: Techniques such as dropout, weight decay, and data augmentation help mitigate overfitting, ensuring that the model generalizes well to new, unseen tasks.
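Two of these regularizers are simple enough to write out directly. This is a minimal pure-Python sketch of inverted dropout and of an SGD step with L2 weight decay; the function names and example values are illustrative, and deep-learning frameworks provide tuned built-in versions of both.

```python
import random


def dropout(activations, p, rng, training=True):
    """Inverted dropout: zero each unit with probability p, rescale survivors by 1/(1-p)."""
    if not training or p == 0.0:
        return list(activations)  # dropout is disabled at inference time
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


def sgd_step_with_weight_decay(weights, grads, lr, weight_decay):
    """SGD update with L2 weight decay: w <- w - lr * (grad + weight_decay * w)."""
    return [w - lr * (g + weight_decay * w) for w, g in zip(weights, grads)]


rng = random.Random(0)
print(dropout([1.0, 2.0, 3.0, 4.0], p=0.5, rng=rng))  # each unit is 0.0 or doubled
print(sgd_step_with_weight_decay([1.0, -2.0], [0.0, 0.0], lr=0.1, weight_decay=0.01))
```

With zero gradients, weight decay alone still shrinks each weight slightly toward zero, which is the mechanism that discourages large weights and overfitting.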

- Iterative Evaluation and Feedback: Incorporating continuous evaluation, both via automated metrics and human-in-the-loop assessments, enables the system to adapt dynamically. This iterative feedback cycle is crucial for maintaining high performance in evolving real-world environments.

Robust Database Setup for Agentic AI

Data Engineering for Real-Time Decision-Making

Data is the lifeblood of agentic AI systems. Designing the underlying data infrastructure involves:

- Multi-Source Data Integration: Agentic AI must access both structured and unstructured data sources. This includes traditional SQL/NoSQL databases, real-time data streams, and even legacy systems. Data ingestion pipelines should be designed for high throughput and low latency.

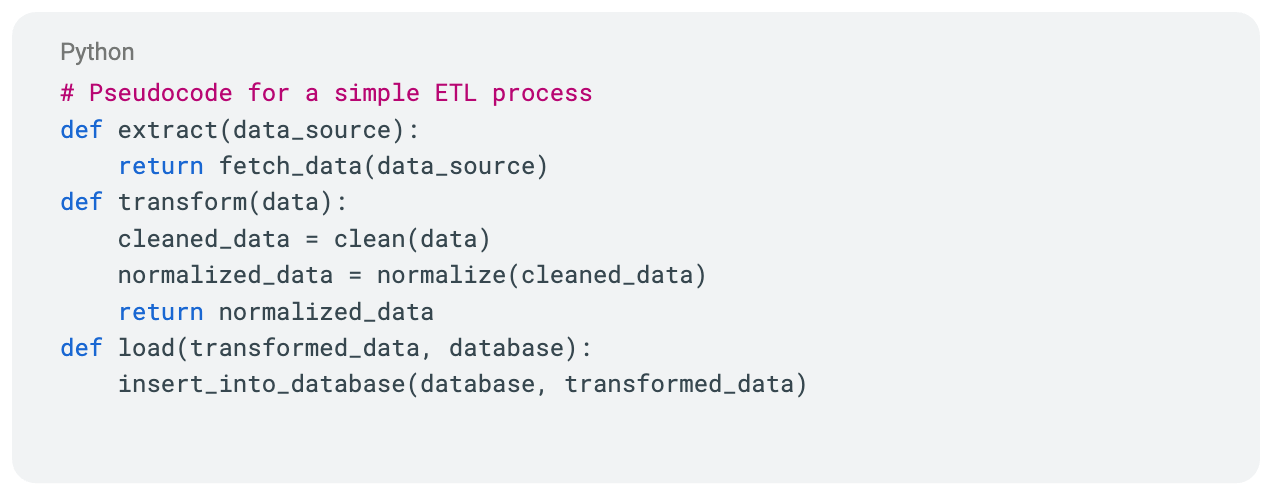

An example of ETL Pipelines: Create Extract, Transform, Load processes for diverse data types.
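A minimal ETL sketch, assuming two hypothetical sources with different shapes (a CSV export and a JSON event feed): extract reads both, transform maps them onto one schema with normalized types and casing, and load upserts into a target keyed by ID. The field names are invented for illustration, and a plain dict stands in for the destination database.

```python
import csv
import io
import json


def extract(csv_text, json_text):
    """Extract: read records from two differently shaped sources."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    events = json.loads(json_text)
    return rows, events


def transform(rows, events):
    """Transform: map both sources onto one schema with normalised types and casing."""
    records = []
    for r in rows:
        records.append({"customer_id": int(r["id"]), "email": r["Email"].strip().lower()})
    for e in events:
        records.append({"customer_id": int(e["customerId"]),
                        "email": e["contact"]["email"].strip().lower()})
    return records


def load(records, store):
    """Load: upsert into a target keyed by customer_id (a dict stands in for a database)."""
    for rec in records:
        store[rec["customer_id"]] = rec
    return store


csv_source = "id,Email\n1, Alice@Example.com \n2,bob@example.com\n"
json_source = '[{"customerId": "3", "contact": {"email": "CAROL@example.com"}}]'
warehouse = load(transform(*extract(csv_source, json_source)), {})
print(warehouse[1]["email"])  # alice@example.com
```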

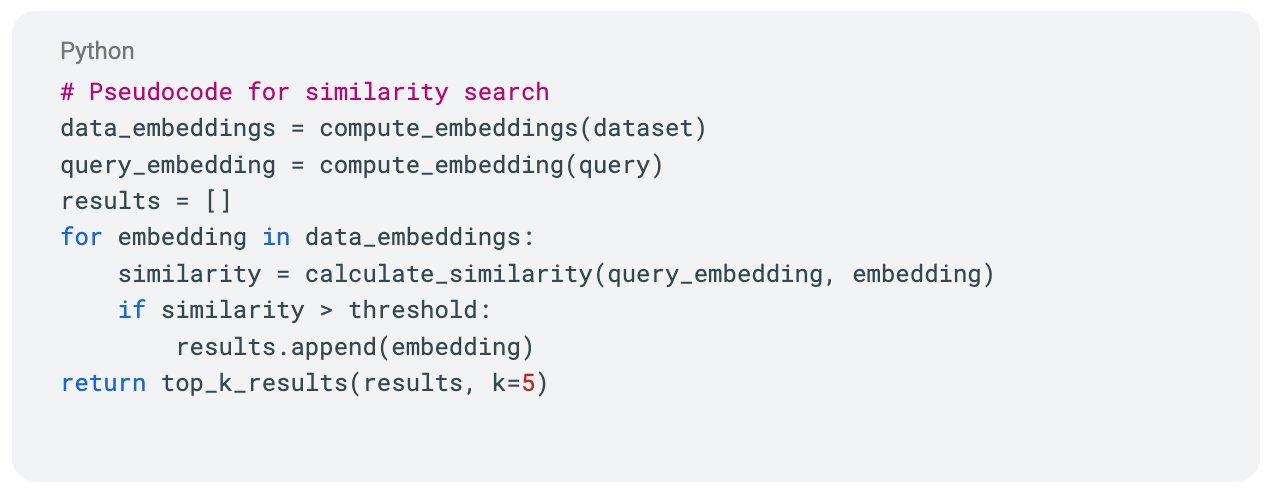

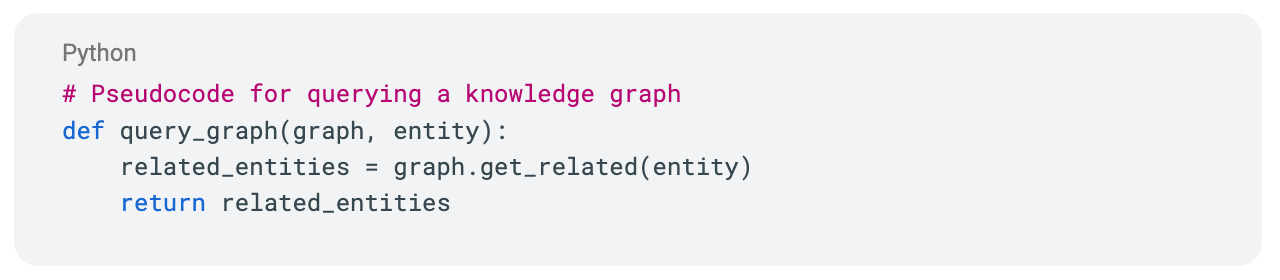

- Vector Databases and Knowledge Graphs: For effective retrieval-augmented generation (RAG) techniques, vector databases store embeddings generated from textual or multi-modal data. Knowledge graphs complement this by providing semantic relationships between entities, enhancing the model’s ability to reason about context and relationships.

An example of Vector Storage: Use embeddings for rapid similarity searches.
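The core operation of a vector store is ranking stored embeddings by similarity to a query embedding. This sketch does exact, brute-force cosine-similarity search in memory; production vector databases typically use approximate nearest-neighbor indexes such as HNSW to stay fast at scale. The document IDs and 3-dimensional embeddings are hypothetical.

```python
import math


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


class InMemoryVectorStore:
    """Brute-force exact search over stored embeddings."""

    def __init__(self):
        self.items = []  # list of (doc_id, embedding)

    def add(self, doc_id, embedding):
        self.items.append((doc_id, embedding))

    def search(self, query, k=2):
        """Return the k most similar (doc_id, score) pairs, highest score first."""
        scored = [(doc_id, cosine_similarity(query, emb)) for doc_id, emb in self.items]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]


store = InMemoryVectorStore()
store.add("refund-policy", [0.9, 0.1, 0.0])  # hypothetical embeddings
store.add("shipping-times", [0.1, 0.9, 0.0])
store.add("return-an-item", [0.8, 0.2, 0.1])
print(store.search([1.0, 0.0, 0.0], k=2))  # refund-policy first, then return-an-item
```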

An example of Knowledge Graphs: Map entity relationships to boost reasoning.
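A knowledge graph can be reduced to (subject, relation, object) triples plus traversal. The sketch below stores triples in an adjacency list and uses breadth-first search to return the chain of relations linking two entities, the kind of multi-hop context an agent could feed into its reasoning. The entities and relation names are hypothetical; graph databases offer query languages for this instead of hand-written traversal.

```python
from collections import defaultdict, deque


class KnowledgeGraph:
    """Minimal triple store: (subject, relation, object) edges with BFS path finding."""

    def __init__(self):
        self.edges = defaultdict(list)

    def add(self, subj, rel, obj):
        self.edges[subj].append((rel, obj))

    def path(self, start, goal):
        """Return the shortest relation chain from start to goal, or None if unconnected."""
        queue = deque([(start, [])])
        seen = {start}
        while queue:
            node, hops = queue.popleft()
            if node == goal:
                return hops
            for rel, nxt in self.edges[node]:
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append((nxt, hops + [(node, rel, nxt)]))
        return None


kg = KnowledgeGraph()
kg.add("Alice", "works_at", "Acme Corp")  # hypothetical facts
kg.add("Acme Corp", "headquartered_in", "Singapore")
kg.add("Alice", "manages", "Project X")
print(kg.path("Alice", "Singapore"))
# [('Alice', 'works_at', 'Acme Corp'), ('Acme Corp', 'headquartered_in', 'Singapore')]
```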

- Scalable, Distributed Storage: Employing cloud-based storage solutions and distributed databases ensures that data scales with the organization’s needs. Technologies such as Apache Kafka for streaming data or cloud-native databases (like Bitdeer AI RDS) provide the necessary infrastructure to support agentic workflows in real time.

- Data Preprocessing and Normalization: Robust ETL (Extract, Transform, Load) processes are essential to clean, normalize, and standardize data. High-quality, timely data is vital for the model’s performance, especially in contexts where decisions must be made rapidly.

Real-Time Data Retrieval and Updating

- Low-Latency Query Mechanisms: To support real-time decision-making, databases must offer rapid query responses. Indexing strategies, such as those used in vector similarity searches, are critical for retrieving relevant context swiftly.

- Memory Systems and Caching: In agentic AI, persistent memory architectures or in-memory databases can cache frequently accessed data. This ensures that the agent can recall historical context and update its internal state efficiently during long-running tasks.

An example of Caching: Keep frequently accessed data in memory.
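A least-recently-used (LRU) cache is one common policy for keeping hot data in memory. This sketch puts an `OrderedDict`-based LRU cache in front of a hypothetical slow backend (a dict here) and counts hits and misses; in production the same role is often filled by an in-memory store such as Redis. The keys and values are invented for illustration.

```python
from collections import OrderedDict


class LRUCache:
    """Fixed-capacity cache that evicts the least recently used entry."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.data = OrderedDict()

    def get(self, key):
        if key not in self.data:
            return None  # miss (this sketch assumes stored values are never None)
        self.data.move_to_end(key)  # mark as most recently used
        return self.data[key]

    def put(self, key, value):
        self.data[key] = value
        self.data.move_to_end(key)
        if len(self.data) > self.capacity:
            self.data.popitem(last=False)  # evict the oldest entry


def fetch_context(key, cache, backend, stats):
    """Serve from cache when possible; otherwise read the backend and cache the result."""
    value = cache.get(key)
    if value is None:
        stats["misses"] += 1
        value = backend[key]  # hypothetical slow database read
        cache.put(key, value)
    else:
        stats["hits"] += 1
    return value


cache = LRUCache(capacity=2)
backend = {"user:1": "prefers email", "user:2": "premium tier", "user:3": "open ticket"}
stats = {"hits": 0, "misses": 0}
for key in ["user:1", "user:1", "user:2", "user:3", "user:1"]:
    fetch_context(key, cache, backend, stats)
print(stats)  # {'hits': 1, 'misses': 4}
```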

- Security and Compliance: As agentic AI systems often access sensitive enterprise data, encryption, access controls, and audit logs are essential. Data governance frameworks must ensure compliance with regulatory standards such as GDPR or HIPAA, particularly when the agent operates in domains like healthcare or finance.

Integrating Model Training with Database Architectures

Achieving true autonomy in agentic AI hinges on the seamless integration of advanced model training and robust data infrastructure. Some integration techniques include:

- Feedback Loops Between Model and Data: Models continuously query the database to retrieve context, then update the database with new insights or learned parameters. This bi-directional flow of information allows the agent to maintain situational awareness and improve over time.

Example: The agent writes new insights to the database, which can trigger model retraining.
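The read-then-write loop can be sketched with a hypothetical `InsightStore`: each agent step first queries prior insights on a topic, then records a new one, and the store signals retraining once enough new insights have accumulated. The class, the threshold, and the retraining placeholder are all assumptions for illustration; a real system would enqueue an actual training job.

```python
class InsightStore:
    """Hypothetical store that persists insights and signals when retraining is due."""

    def __init__(self, retrain_threshold):
        self.insights = []  # list of (topic, text)
        self.pending = 0
        self.retrain_threshold = retrain_threshold
        self.retrain_runs = 0

    def query(self, topic):
        return [text for t, text in self.insights if t == topic]

    def record(self, topic, text):
        self.insights.append((topic, text))
        self.pending += 1
        if self.pending >= self.retrain_threshold:
            self.trigger_retraining()

    def trigger_retraining(self):
        # Placeholder: a real system would schedule fine-tuning on the pending data.
        self.retrain_runs += 1
        self.pending = 0


def agent_step(store, topic, observation):
    context = store.query(topic)  # read: retrieve prior context
    insight = f"{observation} (seen with {len(context)} prior insights)"
    store.record(topic, insight)  # write: persist the new insight
    return insight


store = InsightStore(retrain_threshold=3)
for obs in ["late delivery", "late delivery", "damaged box", "late delivery"]:
    agent_step(store, "shipping", obs)
print(store.retrain_runs, store.pending)  # 1 1
```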

- Dynamic Data Retrieval in Chain-of-Thought Reasoning: When an agent employs chain-of-thought reasoning, it can dynamically query vector databases or knowledge graphs to retrieve the most relevant context. This empowers the agent to adapt its reasoning process based on fresh data, ensuring that decisions remain up-to-date.

Example approach: Query the database at each reasoning step for fresh context.
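The per-step pattern is simply "retrieve inside the reasoning loop, not once up front." In this minimal sketch, a toy keyword-overlap `retrieve` function stands in for a vector or knowledge-graph lookup, and the reasoning steps are fixed strings standing in for steps an LLM would generate; all names and documents are hypothetical.

```python
def retrieve(query, documents, k=1):
    """Toy keyword-overlap retriever standing in for a vector or graph lookup."""
    q = set(query.lower().split())
    ranked = sorted(documents, key=lambda d: len(q & set(d.lower().split())), reverse=True)
    return ranked[:k]


def reason_with_retrieval(steps, documents):
    """Fetch context separately for each reasoning step instead of once up front."""
    trace = []
    for step in steps:
        context = retrieve(step, documents)
        trace.append({"step": step, "context": context})
    return trace


docs = [
    "warehouse inventory for sku 42 is 0 units",
    "supplier lead time for sku 42 is 5 days",
    "holiday closure starts friday",
]
steps = ["check inventory for sku 42", "check supplier lead time"]
for entry in reason_with_retrieval(steps, docs):
    print(entry["step"], "->", entry["context"][0])
```

Because each step issues its own query, the second step retrieves supplier data that the first step's query would not have surfaced.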

- Modular System Architecture: By decoupling the model training and data management layers, engineers can update either component independently. This modularity is vital for maintaining system agility as new data sources become available or as models are further refined.
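One way to express this decoupling in code is to make the agent depend only on small interfaces for the model and the retriever. The sketch below uses `typing.Protocol` for those interfaces; `StaticRetriever` and `EchoModel` are hypothetical stand-ins, and swapping the retriever changes the data layer without touching the model.

```python
from typing import List, Protocol


class Retriever(Protocol):
    def retrieve(self, query: str) -> List[str]: ...


class Model(Protocol):
    def generate(self, prompt: str) -> str: ...


class Agent:
    """Depends only on the Model and Retriever interfaces, not on concrete classes."""

    def __init__(self, model: Model, retriever: Retriever):
        self.model = model
        self.retriever = retriever

    def answer(self, question: str) -> str:
        context = "\n".join(self.retriever.retrieve(question))
        return self.model.generate(f"Context:\n{context}\nQuestion: {question}")


class StaticRetriever:
    """Hypothetical data layer that always returns the same documents."""

    def __init__(self, docs: List[str]):
        self.docs = docs

    def retrieve(self, query: str) -> List[str]:
        return self.docs


class EchoModel:
    """Hypothetical model that returns the first context line of its prompt."""

    def generate(self, prompt: str) -> str:
        return prompt.splitlines()[1]


agent = Agent(EchoModel(), StaticRetriever(["refund window: 30 days"]))
print(agent.answer("What is the refund window?"))  # refund window: 30 days
agent.retriever = StaticRetriever(["refund window: 14 days"])  # swap the data layer only
print(agent.answer("What is the refund window?"))  # refund window: 14 days
```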

Challenges and Future Directions

Despite these advancements, challenges remain in harmonizing model training and database systems:

- Scalability vs. Latency Trade-offs: Balancing the scalability of distributed databases with the need for low-latency responses is an ongoing technical challenge.

- Continuous Data Quality Assurance: Ensuring that incoming data is consistently high quality is essential for maintaining model performance, especially when dealing with real-time streams.

- Security and Ethical Considerations: Integrating advanced AI with sensitive data requires stringent security protocols and ethical frameworks to prevent misuse and protect user privacy.

Looking ahead, innovations in neuromorphic computing and adaptive, self-healing databases may further enhance the synergy between model training and data management in agentic AI systems. Building agentic AI systems that autonomously plan, reason, and act demands a seamless integration of advanced model training techniques with robust, real-time database architectures.