Quick Deployment of DeepSeek-R1

The Bitdeer AI Cloud platform now supports multiple versions of DeepSeek models, including R1 and Janus-Pro. You can deploy an instance with a single click from the instance console, customize the management mode, and choose the model image you need for rapid deployment. This guide uses the DeepSeek-R1 671B image as an example and walks through the deployment step by step.

Quick Overview

DeepSeek-R1 is an advanced open-source reasoning model released on January 20, 2025, with performance comparable to OpenAI’s o1 model. It is optimized for high-performance natural language processing and generative AI tasks, including math, code, and reasoning, and is designed to deliver this performance with considerably fewer computing resources than conventional large language models.

- Model Details: DeepSeek-R1 671B (2.51-bit quantization)

- GPU Requirements: ~300 GB of VRAM (recommended: 4 × NVIDIA H100 80 GiB GPUs)

- System Disk: at least 400 GB

- Security Group Settings: allow inbound TCP traffic on the API port, 40000

- SSH Keypair: Ensure an SSH keypair is ready for secure access.

(For details, see the step-by-step instructions below.)
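As a rough sanity check on the VRAM figure above, the size of the quantized weights alone can be estimated as parameters × bits per weight ÷ 8. The sketch below assumes the 2.51-bit figure is a uniform average across all 671B parameters (the actual quantized image may mix bit widths across layers) and treats the rest of the ~300 GB footprint as KV cache and runtime overhead; the helper names are hypothetical.

```python
# Rough VRAM sizing for DeepSeek-R1 671B at 2.51-bit quantization.
# Assumption: 2.51 bits is treated as a uniform average per weight;
# the real quantized image may mix bit widths across layers.

PARAMS = 671e9          # total parameter count of DeepSeek-R1
BITS_PER_PARAM = 2.51   # average bits per weight in this image (assumed uniform)
GIB = 1024 ** 3         # bytes per GiB (GPU memory is usually quoted in GiB)


def weight_gb(params: float, bits_per_param: float) -> float:
    """Approximate size of the quantized weights in decimal gigabytes."""
    return params * bits_per_param / 8 / 1e9


def vram_fits(num_gpus: int, gib_per_gpu: float, required_gb: float) -> bool:
    """True if the combined VRAM of num_gpus cards covers required_gb (decimal GB)."""
    return num_gpus * gib_per_gpu * GIB >= required_gb * 1e9


if __name__ == "__main__":
    print(f"Quantized weights alone: ~{weight_gb(PARAMS, BITS_PER_PARAM):.0f} GB")
    # ~300 GB is the total footprint quoted in this guide (weights + KV cache + buffers).
    print("4 x H100 (80 GiB) covers ~300 GB:", vram_fits(4, 80, 300))
    print("3 x H100 (80 GiB) covers ~300 GB:", vram_fits(3, 80, 300))
```

With these numbers the weights come to roughly 211 GB. Four 80 GiB cards (about 344 GB in total) leave headroom above the ~300 GB figure, whereas three cards (about 258 GB) would fall short.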

Operation Steps

Install DeepSeek

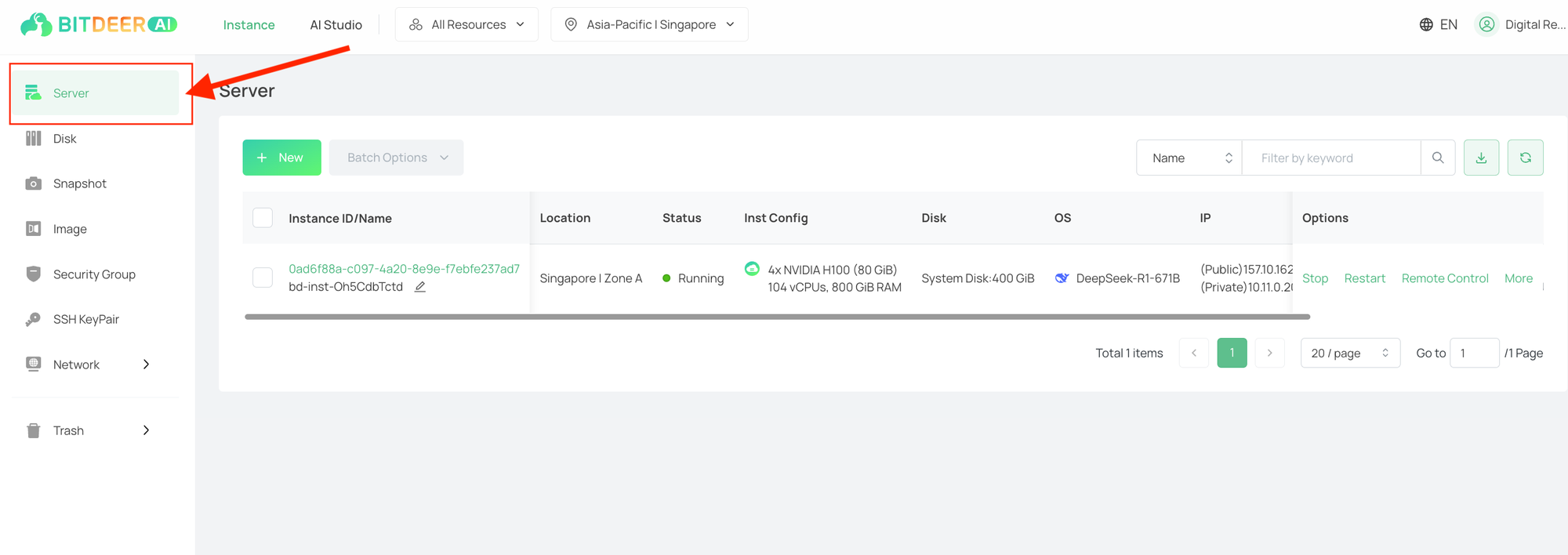

1. Sign up or log in to the Instance Console: access your cloud server console using your credentials.

2. Navigate to the Server section: in the left-side navigation panel, select “Server” to open the cloud server list page, then click “New” to create a new instance.

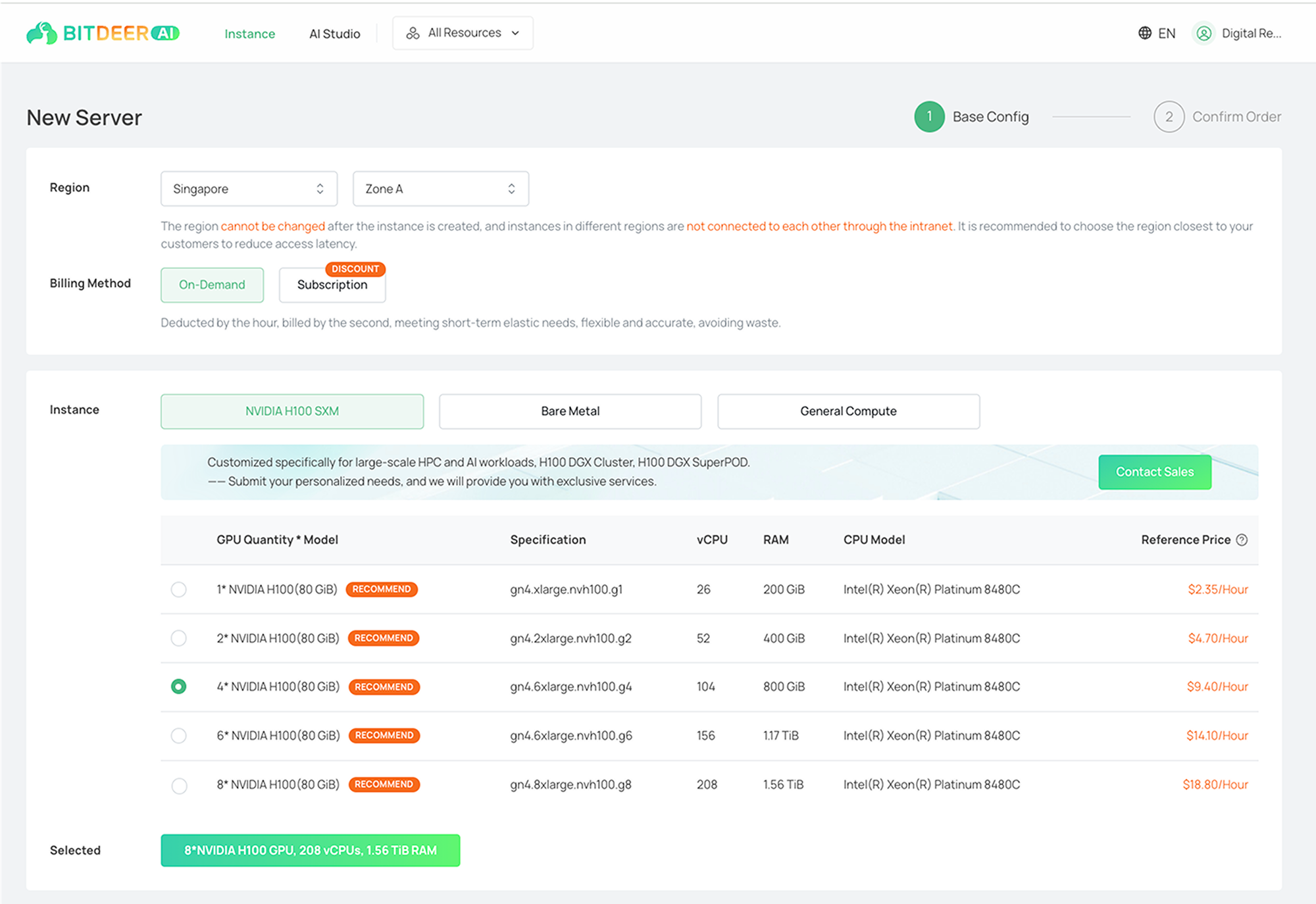

3. Configure your cloud server (virtual machine):

a. Select your desired region, availability zone, and billing method.

b. Under Instance, we recommend 4 × NVIDIA H100 (80 GiB) GPUs to run DeepSeek-R1.

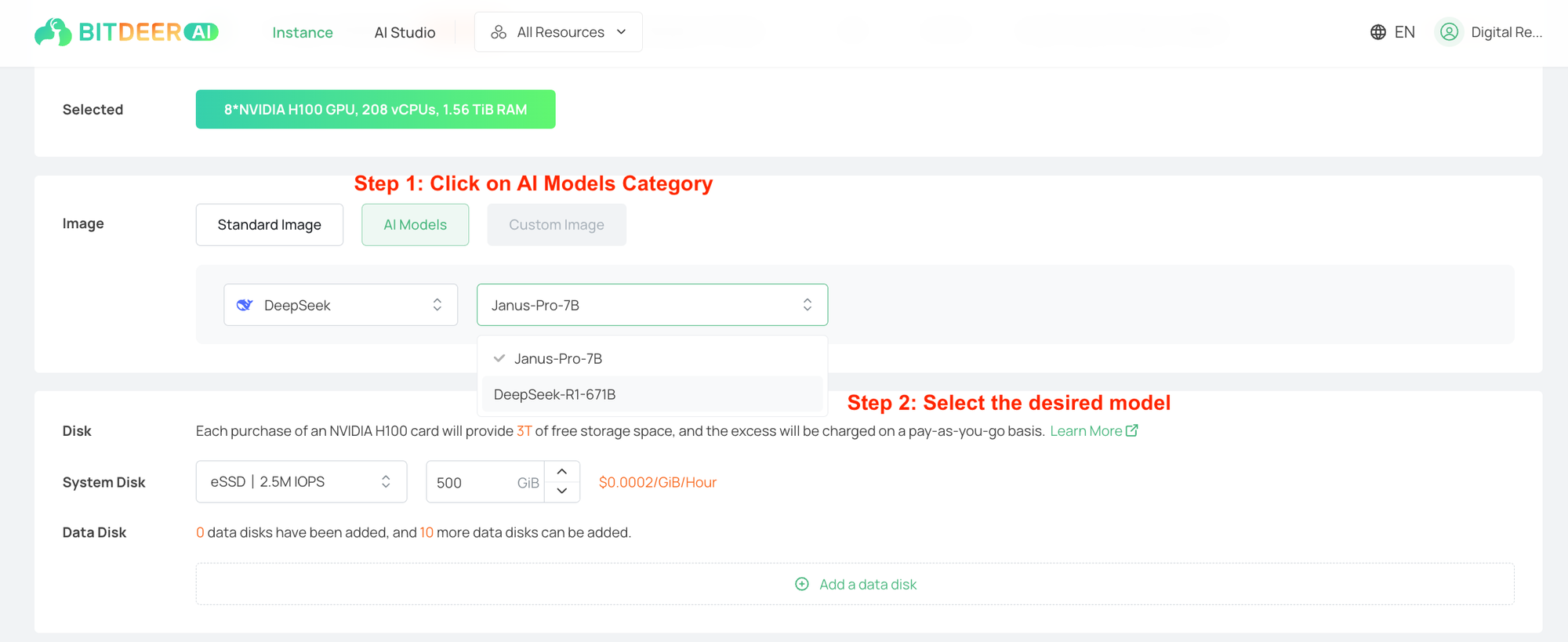

c. Under Image, choose the AI Model category and then select the specific model DeepSeek-R1 671B.

d. Under System Disk, allocate at least 400 GB; this image requires that much disk space.

e. For Network and Public IP Address sections, you can use the default configurations.

f. Under Security Group, use a security group that allows inbound TCP access to port 40000 for API access. Refer here for more information on how to set up a security group.

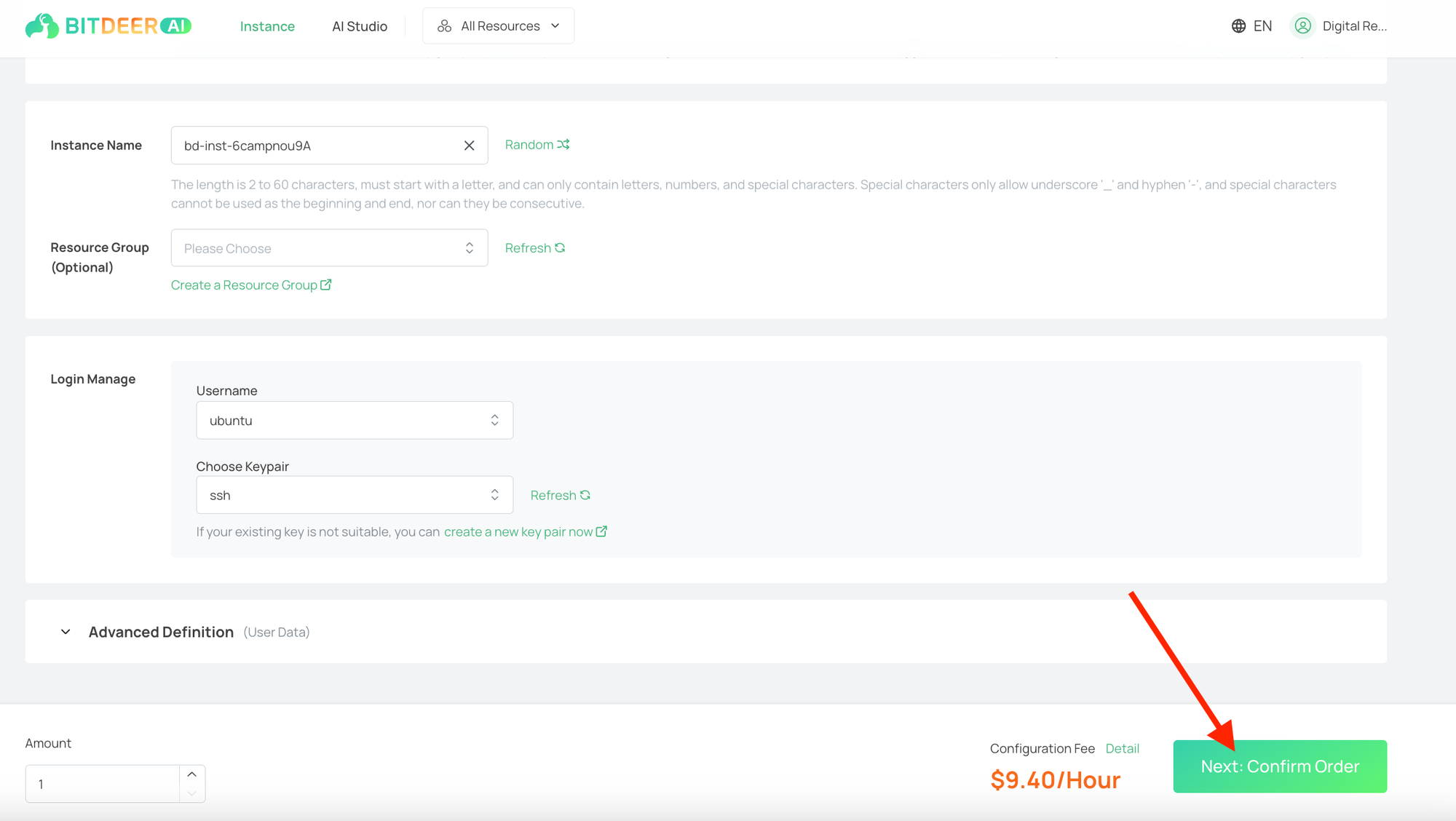

g. For Instance Name and Resource Group, you can keep the default values or configure your own settings as needed.

h. Under Login Management, use an existing keypair, or create a new one if this is your first time. Refer to this article on how to create an SSH keypair.

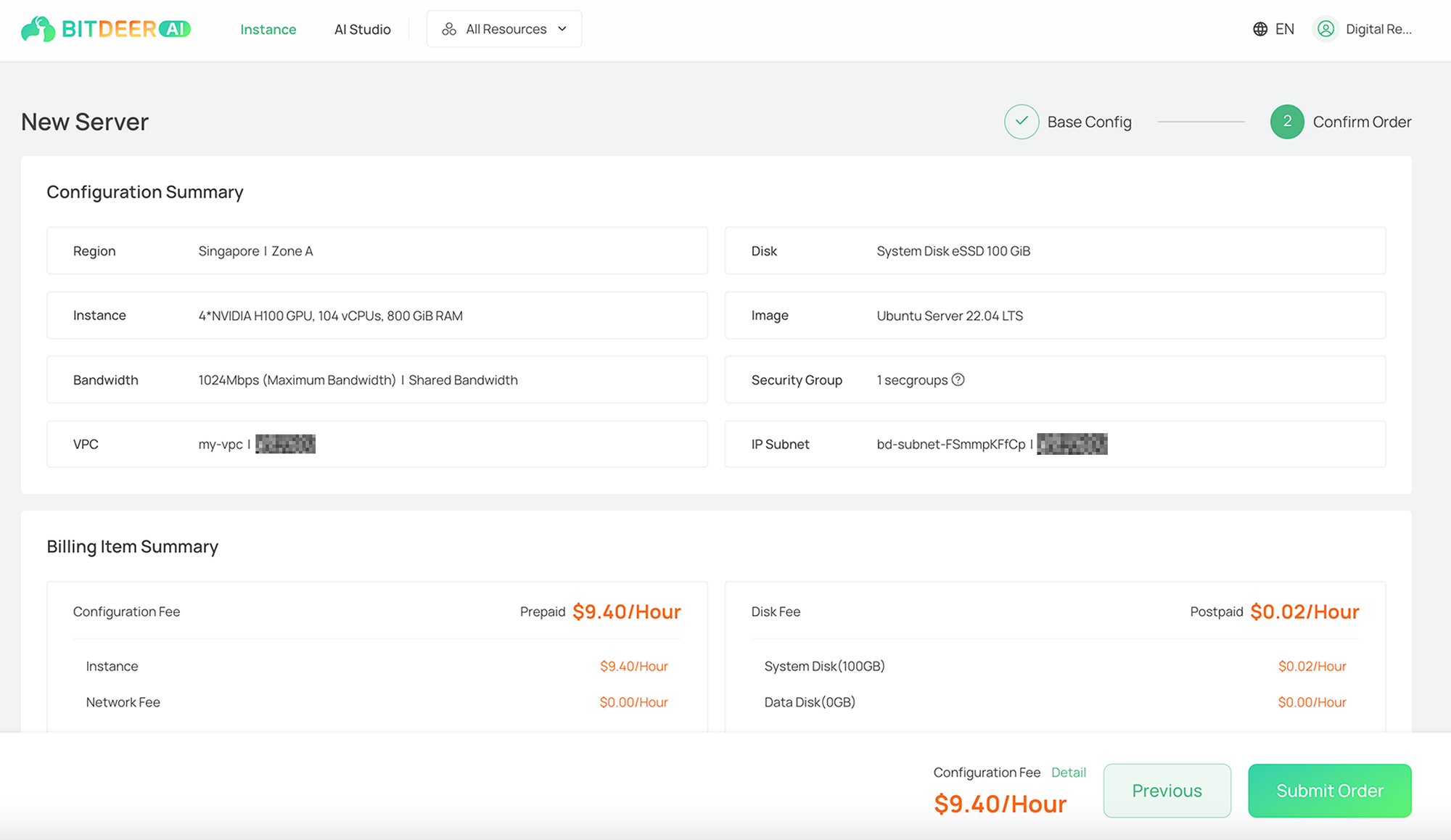

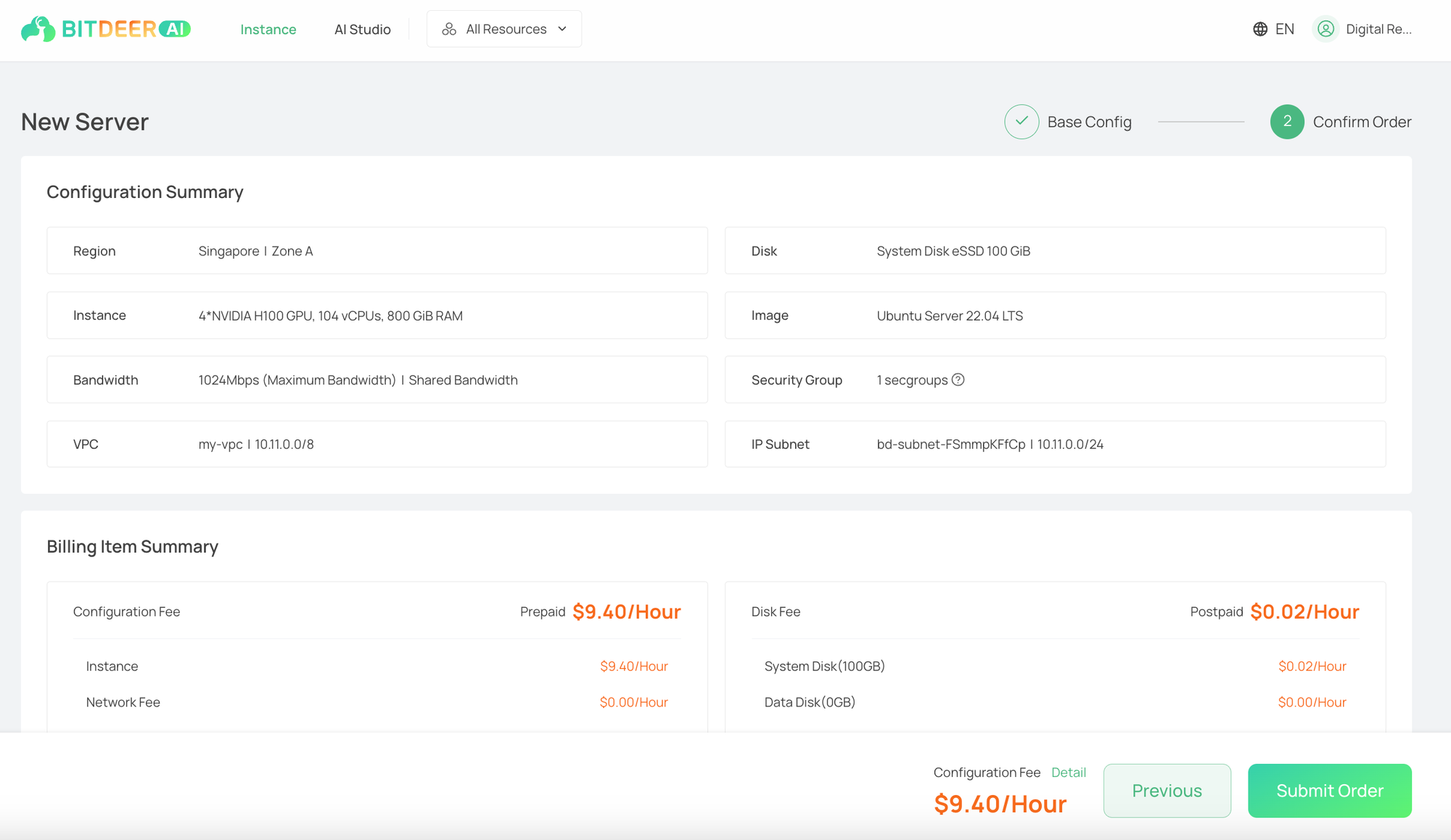

i. Click “Next: Confirm Order” to proceed.

j. Verify your cloud server configuration. If everything is correct, read and accept the agreements, then click “Submit Order”.

k. Confirm the payment and your cloud server will be ready soon.

4. Automatic Deployment:

- Once you complete the configuration steps, the system will automatically deploy the model and start your server.

- Because the model is large, setup takes more than 30 minutes. You will receive a notification email once the server starts running.

- Please ensure that the server status is Running before you start to use it.

5. Once the server is running, it begins downloading the DeepSeek model, so wait at least another 20 minutes before using it.

6. You can find the instance’s public IP address on the Cloud Server details page.
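Because setup and the model download together can take close to an hour, you may prefer to poll the server instead of checking it by hand. The sketch below uses only the Python standard library: it first tests whether TCP port 40000 accepts connections, then fetches the web UI and treats the server as ready once the loading message quoted in the Troubleshooting section no longer appears. The polling interval, overall timeout, and exact marker string are assumptions; adjust them to what your instance actually serves.

```python
import http.client
import socket
import time
import urllib.request

# Text shown while the model is still loading (assumed from the loading
# page described in this guide; verify against what your server returns).
LOADING_MARKER = "The model is loading"


def port_open(host: str, port: int = 40000, timeout: float = 5.0) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def is_ready(page_body: str) -> bool:
    """Treat the UI as ready once the loading placeholder is gone."""
    return LOADING_MARKER not in page_body


def wait_until_ready(host: str, port: int = 40000,
                     interval: float = 60.0, max_wait: float = 3600.0) -> bool:
    """Poll http://host:port/ until it serves a non-loading page or max_wait elapses."""
    url = f"http://{host}:{port}/"
    deadline = time.monotonic() + max_wait
    while time.monotonic() < deadline:
        if port_open(host, port):
            try:
                with urllib.request.urlopen(url, timeout=10) as resp:
                    body = resp.read().decode("utf-8", errors="replace")
                if is_ready(body):
                    return True
            except (OSError, http.client.HTTPException):
                pass  # HTTP not answering cleanly yet; try again after the interval
        time.sleep(interval)
    return False
```

For example, `wait_until_ready("203.0.113.10")` (a placeholder address; substitute your instance’s public IP) returns `True` once the page no longer shows the loading message, or `False` after an hour.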

Using DeepSeek on Cloud Server

You can use DeepSeek-R1 in the following ways:

a. Web UI: visit http://<Public-IP>:40000 to chat with DeepSeek-R1 through the web interface.

b. API: the API follows OpenAI’s RESTful API conventions, so you can send inference requests to http://<Public-IP>:40000/api/chat/completions or other relevant endpoints.
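Because the endpoint follows the OpenAI-style chat completions format, a request is a JSON body with a `model` field and a `messages` list. The following minimal standard-library sketch targets the endpoint given above. The model identifier `deepseek-r1` is a hypothetical placeholder (use the name your server actually exposes), and the optional bearer token applies only if your deployment enforces API-key authentication, which this guide does not state.

```python
import json
import urllib.request
from typing import Optional


def build_chat_request(host: str, prompt: str, model: str = "deepseek-r1",
                       port: int = 40000,
                       api_key: Optional[str] = None) -> urllib.request.Request:
    """Build a POST request for the OpenAI-style chat completions endpoint."""
    url = f"http://{host}:{port}/api/chat/completions"
    payload = {
        "model": model,  # hypothetical model id; use the name your server exposes
        "messages": [{"role": "user", "content": prompt}],
    }
    headers = {"Content-Type": "application/json"}
    if api_key:  # only needed if the deployment requires a bearer token (assumption)
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(url, data=json.dumps(payload).encode("utf-8"),
                                  headers=headers, method="POST")


def chat(host: str, prompt: str, **kwargs) -> str:
    """Send a single-turn prompt and return the assistant's reply text."""
    req = build_chat_request(host, prompt, **kwargs)
    # Long timeout: a 671B reasoning model can take minutes on long answers.
    with urllib.request.urlopen(req, timeout=600) as resp:
        body = json.load(resp)
    # OpenAI-style responses carry the reply in choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

Usage: `print(chat("203.0.113.10", "What is 17 * 23?"))`, with the placeholder address replaced by your instance’s public IP. Splitting request construction from sending makes it easy to inspect the payload before pointing it at a live server.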

Troubleshooting

- Q: I tried to `curl` my public IP address on port 40000, but I see this message: “The model is loading. Please wait. The user interface will appear soon.”

A: This means the model is still loading. You can monitor the loading progress as follows:

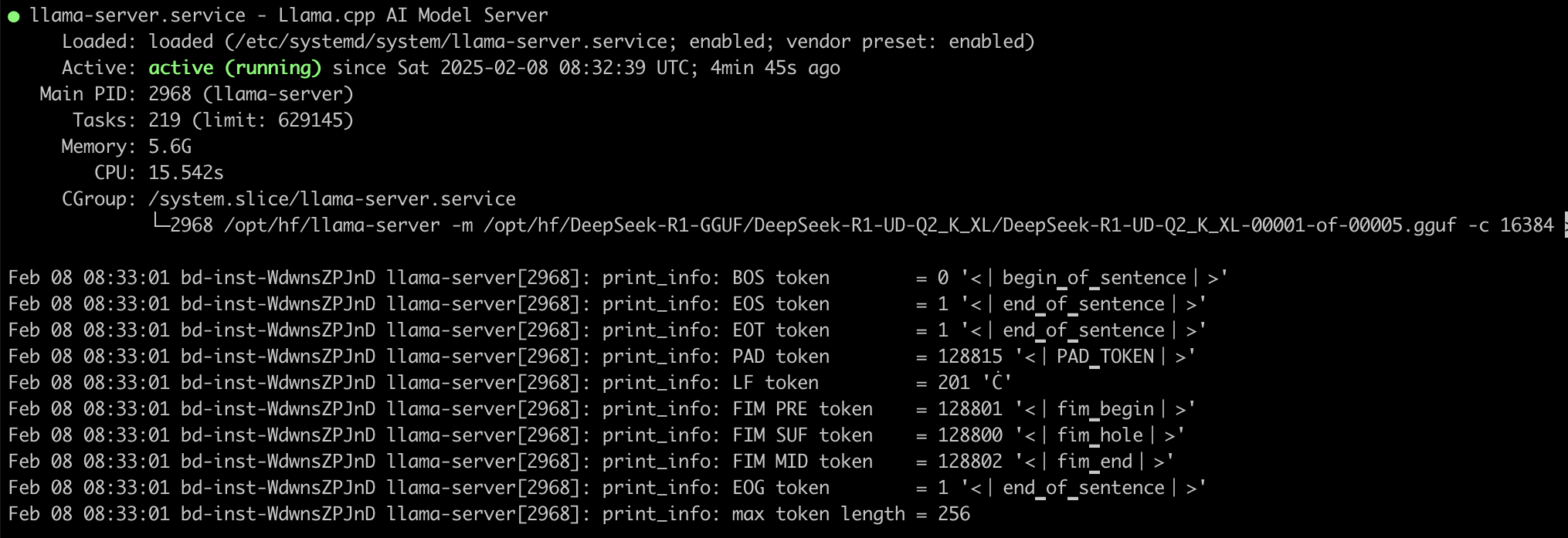

1. SSH into your server (make sure your security group allows inbound TCP access on port 22 for SSH).

2. Switch to the root account with `sudo su`, then run `systemctl status llama-server` to check whether the server is running. The output should show `Active: active (running)`.

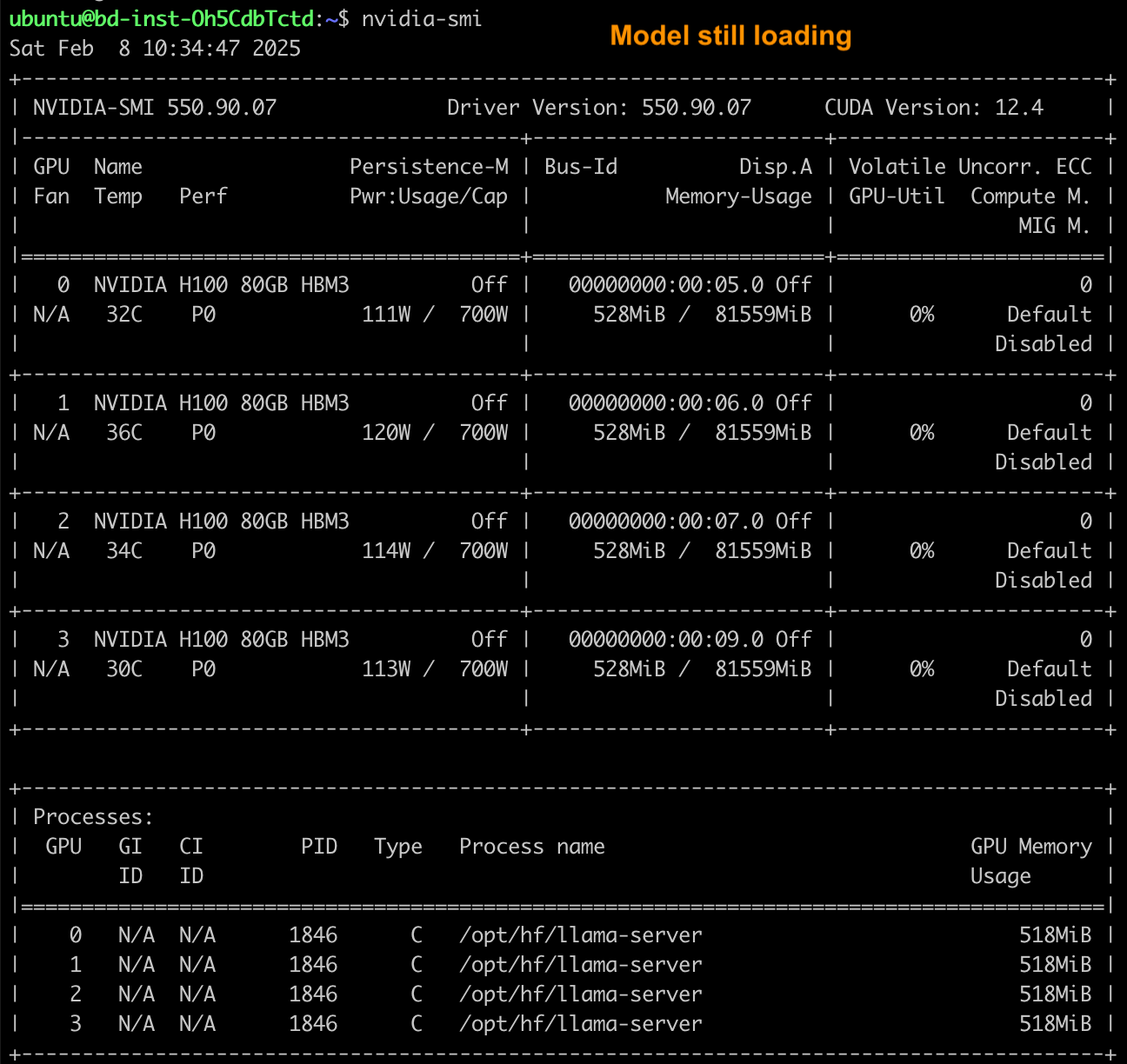

3. Run `nvidia-smi` to check the VRAM usage of each H100 GPU. Once the model is fully loaded, total VRAM usage should be around 300 GB.
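If you prefer to script these checks, the sketch below wraps `systemctl is-active` and the query mode of `nvidia-smi` (`--query-gpu` with `--format=csv,noheader,nounits`, which reports memory in MiB) from Python. The service name `llama-server` comes from this guide; the helper names are hypothetical, and the script must run on the instance itself after you SSH in.

```python
import subprocess
from typing import List, Tuple

GPU_QUERY = ["nvidia-smi", "--query-gpu=index,memory.used,memory.total",
             "--format=csv,noheader,nounits"]


def service_active(name: str = "llama-server") -> bool:
    """True if systemd reports the unit as 'active'."""
    result = subprocess.run(["systemctl", "is-active", name],
                            capture_output=True, text=True)
    return result.stdout.strip() == "active"


def parse_gpu_memory(csv_text: str) -> List[Tuple[int, int, int]]:
    """Parse 'index, used, total' lines (values in MiB) into integer tuples."""
    rows = []
    for line in csv_text.strip().splitlines():
        idx, used, total = (int(field) for field in line.split(","))
        rows.append((idx, used, total))
    return rows


def total_used_gib(rows: List[Tuple[int, int, int]]) -> float:
    """Sum memory.used across GPUs and convert MiB to GiB."""
    return sum(used for _, used, _ in rows) / 1024


def report() -> None:
    """Print service state and per-GPU memory; call this on the instance."""
    print("llama-server active:", service_active())
    out = subprocess.run(GPU_QUERY, capture_output=True, text=True,
                         check=True).stdout
    rows = parse_gpu_memory(out)
    for idx, used, total in rows:
        print(f"GPU {idx}: {used} / {total} MiB used")
    print(f"Total VRAM in use: {total_used_gib(rows):.1f} GiB")
```

Calling `report()` once loading finishes should show a total close to the ~300 GB figure above. Note that the script reports GiB, and 300 GB is roughly 279 GiB.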

Why Choose Bitdeer AI Virtual Machine?

Bitdeer AI’s virtual machine services offer several advantages for AI workloads, including:

- High-Performance Computing: Access to NVIDIA H100, H200, and other high-end GPUs for AI training and inference.

- Scalability: Easily scale resources based on workload demands.

- Optimized Networking: Low-latency, high-bandwidth interconnects for faster data transfer and reduced training times.

- Flexible Deployment Options: Choose between bare metal servers for dedicated performance or virtual machines for cost-effective, on-demand AI processing.

- Secure and Reliable Infrastructure: Enterprise-grade security and 24/7 support to ensure seamless operations.

Deploying DeepSeek-R1 on Bitdeer AI’s virtual machine infrastructure provides a powerful, scalable, and cost-effective solution for AI model training and inference. By following this guide, you can set up and run DeepSeek-R1 efficiently, taking advantage of Bitdeer AI’s high-performance computing resources for your AI projects.