Training Jobs

Overview

Training Jobs let users run distributed training and fine-tuning of machine learning models efficiently. A job can scale from a single machine to a large distributed setup through the same APIs and interfaces. The feature is extensible and portable: it can be deployed across different cloud environments and integrates with a range of ML frameworks. It also uses advanced scheduling techniques to improve resource utilization and reduce cost. The operator supports custom resources for different ML frameworks, such as PyTorch, TensorFlow, and MPI.

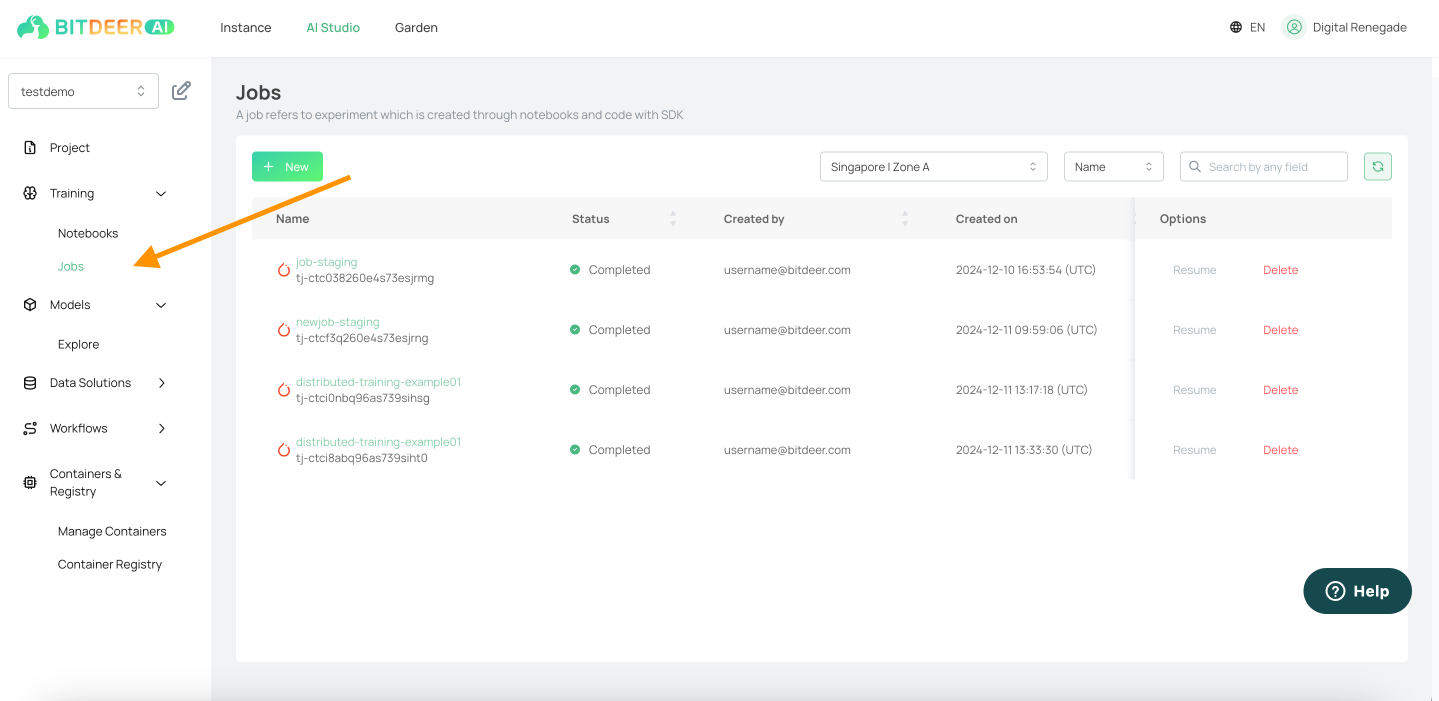

You can find the Training Job feature under AI Studio here.

In addition to the web interface, Training Jobs are available through the SDK and the CLI. Please refer to the SDK documentation here, or the CLI documentation here.

Other info

In AI Studio, machine learning workloads typically fall into one of two broad categories:

- Interactive Sessions: In this scenario, a data scientist opens an interactive environment, either a container terminal session or one of our cloud Jupyter notebooks. This hands-on approach allows machine learning engineers and data scientists to iteratively develop, debug, and refine models in real time.

- Training Jobs: Here, a data scientist packages the necessary code, data, and configurations into a self-contained workload and submits it for execution, which can run at distributed scale across multiple workers or machines. Once the process starts, it runs without continuous human intervention. The data scientist can check logs, metrics, and outputs periodically or after training concludes, rather than actively monitoring or interacting with the process.
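To make the idea of a self-contained, non-interactive workload more concrete, here is a minimal sketch of an entry-point script that could run unchanged on one worker or many. It is an illustration only, not the AI Studio SDK or CLI. It assumes the launcher exposes each worker's index and the total worker count through the `RANK` and `WORLD_SIZE` environment variables, a convention used by PyTorch's `torchrun`. Whether Training Jobs set these variables is an assumption, and the helper names `WorkerContext`, `read_worker_context`, and `shard_indices` are hypothetical.

```python
import logging
import os
from dataclasses import dataclass


@dataclass
class WorkerContext:
    rank: int        # index of this worker within the job
    world_size: int  # total number of workers in the job


def read_worker_context(env=None) -> WorkerContext:
    """Read rank and world size from the environment.

    Assumption: the launcher sets RANK and WORLD_SIZE. When they are
    missing, fall back to a single-worker run so the same script also
    works on one machine.
    """
    env = os.environ if env is None else env
    return WorkerContext(
        rank=int(env.get("RANK", "0")),
        world_size=int(env.get("WORLD_SIZE", "1")),
    )


def shard_indices(num_samples: int, ctx: WorkerContext) -> range:
    """Give each worker a disjoint, strided slice of the sample indices."""
    return range(ctx.rank, num_samples, ctx.world_size)


def main() -> None:
    logging.basicConfig(level=logging.INFO)
    ctx = read_worker_context()
    indices = shard_indices(num_samples=10, ctx=ctx)
    # Nobody is attached to the process, so progress is reported via logs.
    logging.info(
        "worker %d of %d handles samples %s",
        ctx.rank, ctx.world_size, list(indices),
    )


if __name__ == "__main__":
    main()
```

Because the script takes all of its inputs from the environment and reports only through logs, it needs no human interaction after submission, which is the property that separates a training job from an interactive session.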