Submit Training Job

You can submit a training job using the web interface, SDK or CLI. This user guide mainly covers the web experience. You can refer to SDK documentation here, or CLI documentation here. This guide mainly covers the usage on web portal.

Operation Scenario

Submit a training job to run containerized workload on multiple worker machines.

Prerequisites

- Register a Platform Account:

Create a Bitdeer AI account. - Complete KYC Verification:

When prompted, fulfill the KYC (Know Your Customer) requirements by providing your real-name information to ensure compliance and security. - Prepare Your Containerized Workload:

Ensure that your workload has been packaged into a container image and is publicly accessible through a container registry (e.g., Docker Hub).

Operations

- Log in to use AI Studio Console.

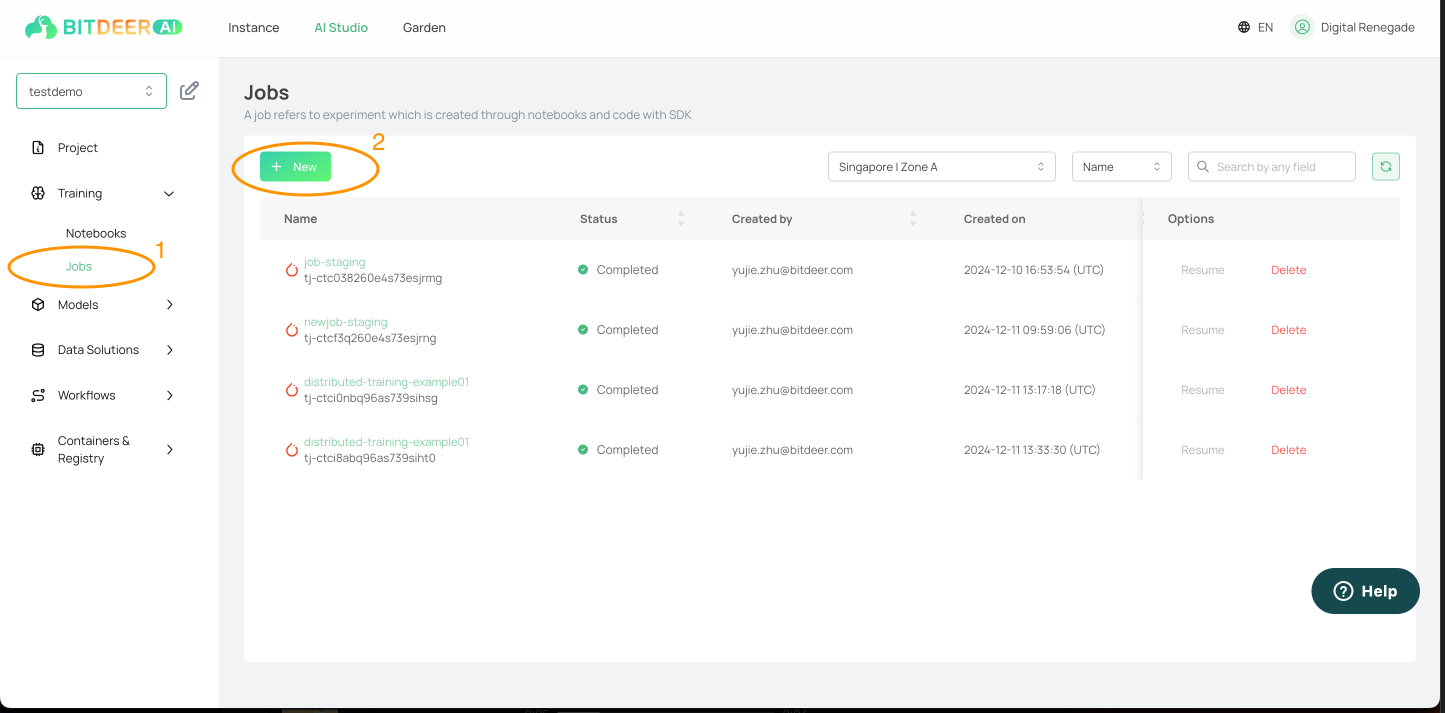

- In the left navigation tree, expand "Training" and select "Jobs".

- Click on "New" to proceed to creating a new training job. Configure the following information according to the page prompts.

| Configuration parameters | Configuration instructions |

|---|---|

| Region |

- Region: It is recommended to choose the region closest to your customer, which can reduce access delay and improve access speed. - Available zone: If you need to purchase multiple cloud servers, it is recommended to choose different available areas to achieve disaster tolerance. For more information, please refer to Regions and Available Areas. |

| Image | Specify the containerized workload image to be applied to containers on different workers |

| Job Type |

- PyTorch Job:You are responsible for writing your training code using the native PyTorch Distributed APIs and specifying the required number of workers and GPUs as part of the training job configuration. Once submitted, the training job automatically provisions the necessary Kubernetes pods and sets the appropriate environment variables for the torchrun CLI, enabling distributed PyTorch training to begin. - Tensorflow Job:Make sure the image used and your training script can rely on environment variable `TF_CONFIG` that our training job sets for distributed training. Your code should be prepared to parse these configurations and adjust the training process accordingly. - MPI Job:Install your training code and any required dependencies (e.g., Python libraries, system packages) directly in the image. Confirm that all necessary scripts and entry points are present so the MPI launch command (mpirun or equivalent) can start your program. The environment variables you rely on are primarily those provided by the MPI runtime itself (such as Open MPI) such as `OMPI_COMM_WORLD_RANK`,`OMPI_COMM_WORLD_SIZE`. The `mpirun` command (initiated by the launcher pod) distributes tasks across the worker pods and sets environment variables that enable each MPI process to understand its role within the cluster. |

| No. of workers |

Specify the number of workers that you need to run your distributed training job. (Subject to availability) |

| Computing Resource |

Select the machine that you want to use, by specifying the type of GPU and the number of GPUs on each worker machine. (Subject to availability) For more information, please refer to Specification List. |

| Shared Volumes |

Specify an existing volume that was created previously to persist your data For more information, please refer to Volume. |

- After the setup is finished, click "Launch" to submit your training job.